Baidu Releases China's First Cloud Full-Featured AI Chip, "Kunlun"

Wednesday, 04 July 2018 at 06:29

Today at the Baidu AI Developers Conference, in addition to announcing that the Apollo program has officially entered production, Li Yanhong also unveiled ‘Kunlun’, a chip developed in-house by Baidu and China's first cloud full-featured AI chip.

Li Yanhong said that ‘Kunlun’ is by far the most powerful AI chip in the industry, delivering 260 TOPS at a power consumption of 100+ watts, and that it can efficiently serve both cloud and edge scenarios. In addition to supporting common open source deep learning algorithms, the Kunlun chip supports a wide variety of AI applications, including voice recognition, search ranking, natural language processing, autonomous driving, and large-scale recommendations.

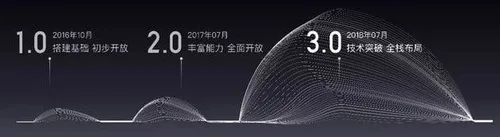

Back in 2011, Baidu started working on an FPGA-based AI accelerator for deep learning and began using GPUs in its data centers. Kunlun is made up of thousands of small cores, which give it nearly 30 times the computational capability of the original FPGA-based accelerator. With it, Baidu Brain achieves a more complete integration of software and hardware.

In his speech, Li Yanhong mentioned that with the rise of big data and artificial intelligence, the volume of data that needs AI processing nearly doubles every 24 months, while the complexity of building models has grown fivefold. Keeping up requires chips with ten times the computing power. Delivering that increase requires algorithms, software, and hardware to work together.

Apart from this, Baidu Kunlun is built on Samsung's 14 nm process and offers 512 GB/s of memory bandwidth. It leverages Baidu’s AI ecosystem, which includes AI scenarios such as search ranking and deep learning frameworks such as PaddlePaddle. Baidu plans to keep developing the chip to support the expansion of an open AI ecosystem. In practice, this means upcoming models will focus on applications for intelligent vehicles, intelligent devices, voice recognition, and image recognition.
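
To put the quoted figures in perspective, here is a rough back-of-the-envelope sketch in Python. It uses only the numbers reported above (260 TOPS, 100+ watts, 512 GB/s). Because the power figure is given as "100+ watts", the efficiency it prints is an upper bound. The function names are illustrative, and the calculation assumes 1 GB = 10^9 bytes; neither comes from Baidu.

```python
# Figures quoted in the article. Power is reported as "100+ watts",
# so 100 W is treated here as a lower bound on actual consumption.
PEAK_TOPS = 260            # tera-operations per second
POWER_WATTS = 100          # watts (lower bound)
MEM_BANDWIDTH_GB_S = 512   # gigabytes per second (assuming 1 GB = 1e9 bytes)


def tops_per_watt(tops: float, watts: float) -> float:
    """Peak throughput per watt of power consumed."""
    return tops / watts


def ops_per_byte(tops: float, bandwidth_gb_s: float) -> float:
    """Operations the chip can perform per byte read from memory at peak rates."""
    return (tops * 1e12) / (bandwidth_gb_s * 1e9)


efficiency = tops_per_watt(PEAK_TOPS, POWER_WATTS)
intensity = ops_per_byte(PEAK_TOPS, MEM_BANDWIDTH_GB_S)

print(f"Efficiency: at most {efficiency:.1f} TOPS per watt")
print(f"Peak compute vs. memory bandwidth: about {intensity:.0f} operations per byte")
```

The second number suggests that a workload would need to perform several hundred operations on each byte it fetches from memory to approach the quoted peak throughput.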

Source: Anzhuo
