From November 26 to 30, Amazon held its AWS re:Invent 2018 conference in Las Vegas. As the annual conference of Amazon Web Services (AWS), the world's largest public cloud vendor, re:Invent attracts tens of thousands of developers and industry insiders from around the world every year. At re:Invent 2017, AWS released 22 major products in one go, and this year's event was just as impressive. Two days after unveiling Graviton, its first in-house ARM-based server CPU, AWS CEO Andy Jassy announced the company's first cloud AI chip, Inferentia.

With the launch of this machine learning chip, Amazon is entering a market dominated by Intel and NVIDIA, hoping to improve its profitability over the next few years. Amazon is one of the biggest buyers of Intel and NVIDIA chips, which power its AWS cloud computing business. Now, however, Amazon has started designing its own chips.

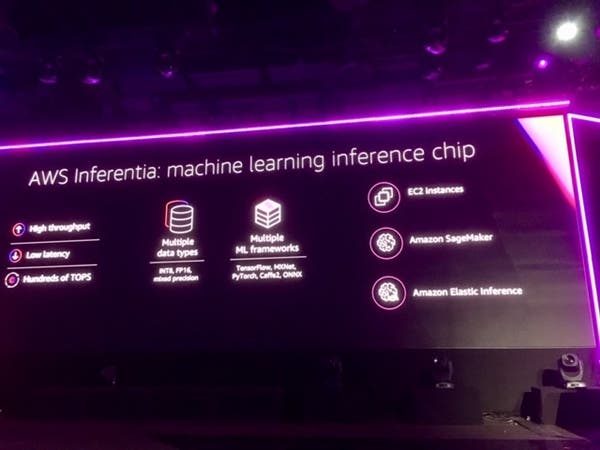

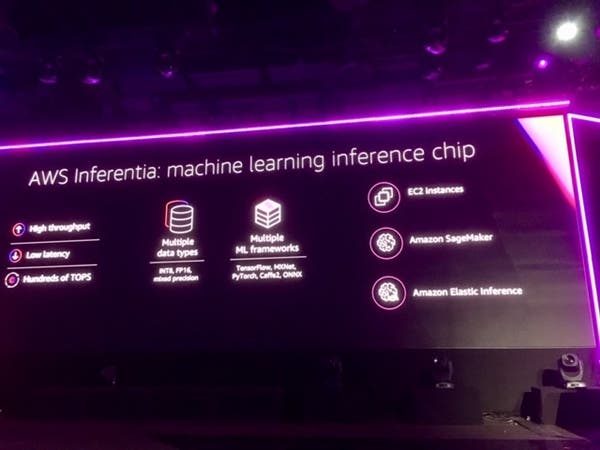

Amazon has released few details about the chip's specifications, announcing only its headline features. Each chip is expected to deliver hundreds of TOPS (tera operations per second), and multiple chips can be combined to reach thousands of TOPS. Inferentia supports INT8 and FP16 precision, as well as popular machine learning frameworks such as TensorFlow, Caffe2, and ONNX. The chip is designed for inference, the stage in which an already-trained model is applied to new data. For example, a model might process incoming audio and convert it into a text-based request.

With Inferentia, AWS becomes the third cloud service provider to launch its own cloud AI chip, after Google and Huawei. The chip does not directly threaten Intel's and NVIDIA's business, because Amazon will not sell it. However, Amazon's cloud computing services will start using its own chips next year, and if the company comes to rely on its own designs, NVIDIA and Intel stand to lose a major customer.

Intel's processors currently dominate the machine learning inference market, which Morningstar analysts expect to reach $11.8 billion by 2021. In September of this year, NVIDIA also launched its own inference chip to compete with Intel.

In addition to its machine learning chip, Amazon released Graviton, a processor for its cloud computing division. Graviton is based on ARM technology. ARM designs are currently used mostly in smartphones, but many companies have tried to bring them to data centers, which could challenge Intel's dominance of that market.

Amazon is not the only cloud computing vendor that designs its own chips. In 2016, Google revealed an artificial intelligence chip of its own that competes with NVIDIA's products.

Custom chips are expensive to design and manufacture, and analysts point out that such investments are driving up research and development spending at large technology companies.

Google's cloud computing executives have said that customer demand for its custom TPU chips is strong. However, the chips are expensive to use and require customized software. Google Cloud charges $8 per hour for TPU chips, while NVIDIA-based chips cost as little as $2.48 per hour, less than a third of the price.