Every coin has two sides. This is also true for technologies. They come to ease our lives, to increase productivity and efficiency, to get things done faster, etc. But there are also many people who prefer using these technologies for fun or, what’s worse, for misleading others. Now, AI helps them do their dirty deeds with little effort. Say, they create “deepfakes”: synthetic media made by manipulating or combining authentic images and videos.

People who create deepfakes may use them to disseminate misinformation. For example, they can show celebrities in fake situations, or they can impersonate politicians and make them say practically anything. There have also been cases in which AI was used to create fake satellite imagery. These deepfakes looked quite realistic and could lead people astray.

What’s more dangerous is that deepfakes often go viral. They spread on social media like wildfire, and no one can calculate how much harm they do. As Facebook is the most popular social platform, it should be at the forefront of the fight against deepfakes.

Facebook Can Detect Deepfakes

It turns out the company’s AI researchers have developed technology that can do more than detect deepfakes. The company claims that its AI can also trace where a deepfake came from. It looks for unique characteristics that the generating model leaves in an image, and these help it identify the source of the deepfake.

In fact, Facebook hasn’t developed this technology independently. It joined hands with Michigan State University to create technology that can determine whether a video or image is a deepfake or genuine.

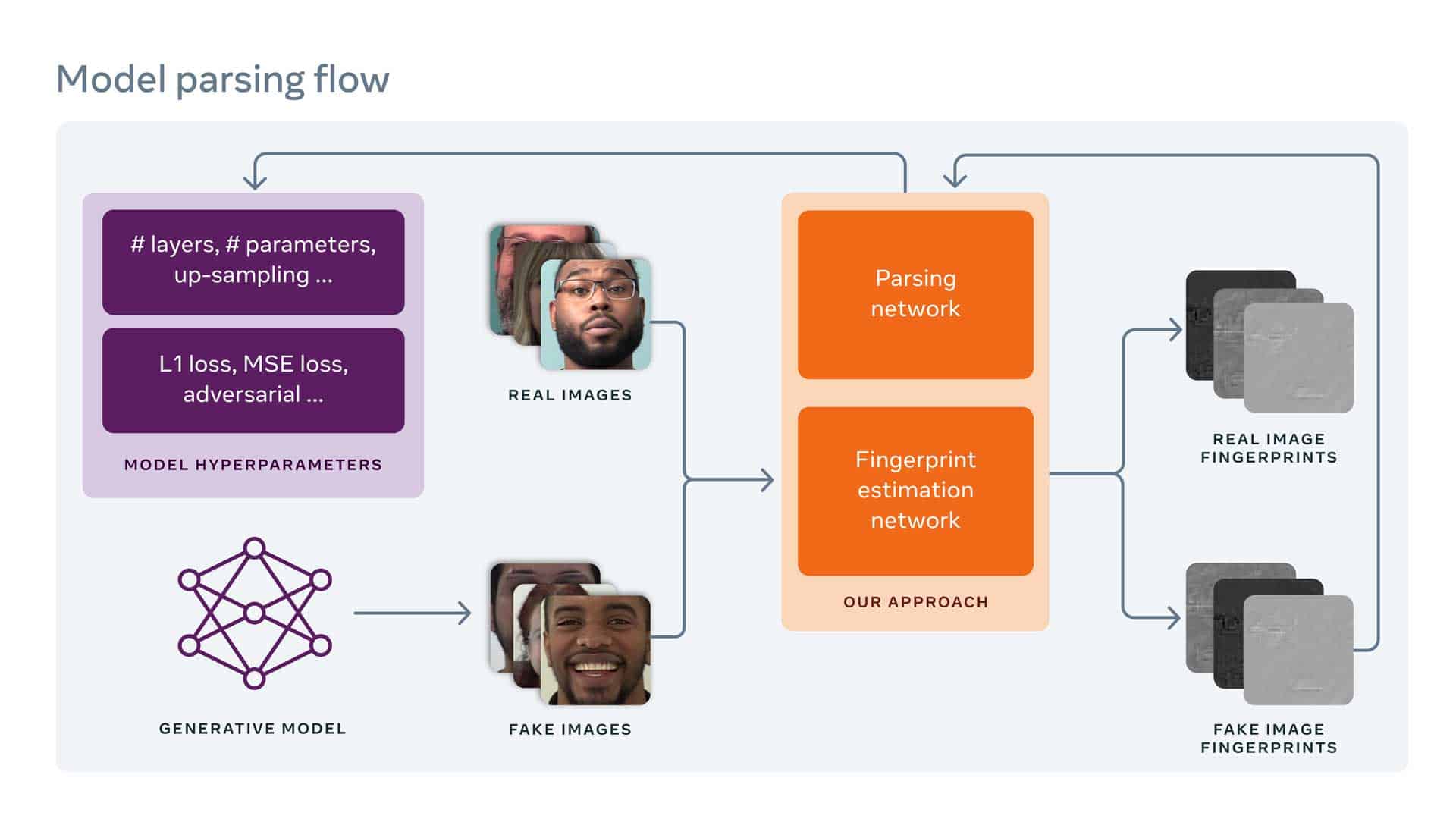

Facebook’s researchers describe the approach as follows: “Our reverse engineering method relies on uncovering the unique patterns behind the AI model used to generate a single deepfake image. We begin with image attribution and then work on discovering properties of the model that was used to generate the image. By generalizing image attribution to open-set recognition, we can infer more information about the generative model used to create a deepfake that goes beyond recognizing that it has not been seen before. And by tracing similarities among patterns of a collection of deepfakes, we could also tell whether a series of images originated from a single source. This ability to detect which deepfakes have been generated from the same AI model can be useful for uncovering instances of coordinated disinformation or other malicious attacks launched using deepfakes.”
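
To make the idea of “tracing similarities among patterns” a bit more concrete, here is a toy sketch in Python. It is not Facebook’s method. It assumes, purely for illustration, that a generator’s trace can be approximated by the high-frequency residual of an image (the image minus a blurred copy), and that two images share a source when their residuals point in a similar direction. The function names `fingerprint` and `same_source` and the `threshold` value are hypothetical.

```python
import numpy as np


def fingerprint(image: np.ndarray) -> np.ndarray:
    """Toy fingerprint: high-frequency residual of a grayscale image.

    Assumption for illustration only: the residual left after removing
    a 3x3 box-blurred copy stands in for the generator's pattern.
    """
    img = image.astype(np.float64)
    h, w = img.shape
    # Pad by repeating edge pixels so the blur keeps the original size.
    padded = np.pad(img, 1, mode="edge")
    # 3x3 box blur: average of the nine shifted copies of the image.
    blurred = sum(
        padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)
    ) / 9.0
    residual = (img - blurred).ravel()
    norm = np.linalg.norm(residual)
    # Normalise to unit length so a dot product gives cosine similarity.
    return residual / norm if norm > 0 else residual


def same_source(fp_a: np.ndarray, fp_b: np.ndarray, threshold: float = 0.5) -> bool:
    """Guess whether two fingerprints come from the same source.

    The threshold is an arbitrary illustrative value, not a tuned one.
    """
    return float(np.dot(fp_a, fp_b)) >= threshold
```

Given a collection of suspicious images of the same size, one could compute a fingerprint for each and group those for which `same_source` returns `True`. A real system would learn the fingerprint from data rather than rely on a fixed blur.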

The Car Analogy Behind The Method

Interestingly, the method goes beyond a simple fake-or-real verdict. It also tries to work out the hyperparameters of the generative model behind an image, even if that model has never been seen before. The researchers explain this with a car analogy:

“To understand hyperparameters better, think of a generative model as a type of car and its hyperparameters as its various specific engine components. Different cars can look similar, but under the hood they can have very different engines with vastly different components. Our reverse engineering technique is somewhat like recognizing the components of a car based on how it sounds, even if this is a new car we’ve never heard of before.”