Over the years, Apple has placed great emphasis on protecting user privacy, and every Apple user can see it. At times, this focus on privacy has even become a nuisance to users. Apple’s stance is so firm that in early 2016, the company refused to help the FBI unlock the iPhone of a dead terrorist.

In September 2020, Apple even released an ad, “Over Sharing”, to introduce consumers to the iPhone’s privacy protections. The video shows people embarrassingly sharing personal information with strangers, including credit card numbers, login details, and web browsing history.

Apple said: “Some things shouldn’t be shared, that’s why the iPhone is designed to help you control information and protect privacy.” The company has repeatedly stated that user privacy is a “fundamental human right” and one of its “core values”. At CES 2019, the company even rented a huge billboard on the side of a hotel in Las Vegas. The advertisement read: “What happens on your iPhone stays on your iPhone”. The giant ad made clear how much importance Apple places on user privacy.

In all honesty, many Android users admire Apple’s level of privacy protection. However, the big question is: will Apple keep up this level of privacy protection? The answer is NO.

Apple will scan photos for Child Sexual Abuse Material

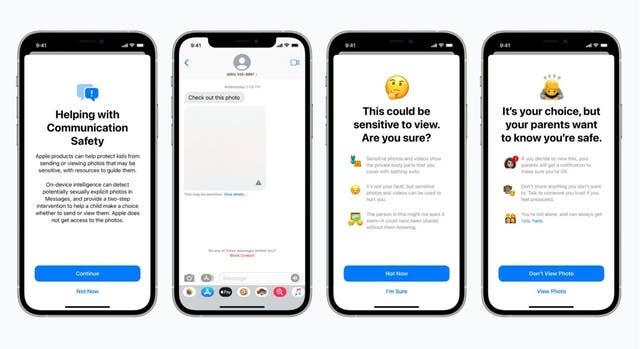

Just a few days ago, Apple announced new technology that breaks with the very emphasis on user privacy it has long insisted on. The company is introducing a new on-device tool for detecting Child Sexual Abuse Material (CSAM). What is on-device scanning? It means the scanning is done on the user’s own device rather than on Apple’s servers. To detect this material, iOS and iPadOS will gain a new technology that computes hash values of images directly on the device. This on-device checking covers photos headed for iCloud and, through a separate feature, images sent or received in Messages on children’s accounts.

Apple will then compare the extracted hash values with a database of hashes of known child sexual abuse images provided by the National Center for Missing and Exploited Children (NCMEC) and other child safety organizations.
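The comparison step can be sketched in a few lines of Python. This is a minimal illustration under simplifying assumptions, not Apple’s implementation: Apple describes using a perceptual hash it calls NeuralHash and a blinded, unreadable database, whereas this sketch swaps in an ordinary SHA-256 digest and a plain in-memory set. The function names `image_hash` and `matches_known` are hypothetical.

```python
from __future__ import annotations

import hashlib


def image_hash(data: bytes) -> str:
    """Return a SHA-256 hex digest of the raw image bytes.

    Simplifying assumption: a cryptographic hash stands in for a
    perceptual hash, so only byte-identical files will match.
    """
    return hashlib.sha256(data).hexdigest()


def matches_known(data: bytes, known_hashes: set[str]) -> bool:
    """Report whether an image's hash appears in the set of known hashes."""
    return image_hash(data) in known_hashes


if __name__ == "__main__":
    # Hypothetical database built from placeholder bytes, not real data.
    known = {image_hash(b"known-image-bytes")}
    print(matches_known(b"known-image-bytes", known))   # True
    print(matches_known(b"known-image-bytes!", known))  # False: one extra byte
```

Because a SHA-256 digest changes completely when even one byte changes, this exact-match approach would miss a resized or recompressed copy of a known image. That weakness is one reason systems like Apple’s rely on perceptual hashing instead.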

To check whether a given photo matches known child sexual abuse images, Apple stated that this database will be transformed into an unreadable set of hashes and stored securely on the user’s device. Separately, when a child’s account sends or receives photos in iMessage, the device will also analyze those photos, using on-device machine learning rather than the hash database.

If the system considers a photo to be sexually explicit, the photo will be blurred, and iMessage will warn the child and ask whether they really want to view or send it. In addition, if the child goes ahead and views or sends the explicit photo anyway, the app can notify the parents.

Apple will also scan iCloud images

The American manufacturer will also use a similar algorithm to check photos that users upload to iCloud. Before a photo is uploaded, the device performs the match and wraps the result in encrypted data that is uploaded along with the photo. If, after the upload and hash comparison, Apple believes an account contains child sexual abuse images, Apple’s servers will decrypt the relevant data and the photos will be reviewed manually.
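Apple’s published description adds one detail to this review step: an account is not reviewed because of a single match, only after its number of matches crosses a threshold. The sketch below models that rule with a plain counter. It is a hypothetical simplification: in Apple’s design, threshold secret sharing keeps the server from interpreting individual match results below the threshold at all, and the `Account` class and `threshold` value here are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Account:
    """Hypothetical per-account record of hash-match results."""

    threshold: int  # illustrative value only, not Apple's real setting
    match_count: int = 0
    flagged_for_review: bool = False

    def record_upload(self, matched: bool) -> None:
        """Count a matching upload and flag the account for manual
        review once the number of matches reaches the threshold."""
        if matched:
            self.match_count += 1
        if self.match_count >= self.threshold:
            self.flagged_for_review = True


if __name__ == "__main__":
    account = Account(threshold=3)
    for matched in [True, False, True]:
        account.record_upload(matched)
    print(account.flagged_for_review)  # False: only 2 matches so far
    account.record_upload(True)
    print(account.flagged_for_review)  # True: 3 matches reached
```

Flagging only at a threshold is meant to keep a rare false match on a single photo from exposing an account to human review.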

Some experts believe Apple’s original intention in proposing the CSAM program is good, and that cracking down on such illegal acts is entirely justified. However, this whole scheme bypasses the end-to-end encryption originally designed to enhance user privacy. It amounts to a backdoor that could pose serious security and privacy risks.