Facebook News Feed Has Been Displaying Misinformation Instead Of Blocking It

News | Friday, 01 April 2022 at 07:26

Yesterday, The Verge reported that a “massive ranking failure” in Facebook’s algorithm over the past six months created potential “integrity risks” for half of all News Feed views.

The information comes from a group of engineers who discovered the algorithm failure last October. They found that when a batch of misinformation was flowing through the News Feed, the system was promoting those posts instead of blocking their spread. In effect, misinformation that appeared in the News Feed got 30% more views. Strangely, Facebook couldn’t find the root cause; the engineers could only monitor how such posts behaved. Interestingly, after a few weeks at the top of the feed, such posts stopped getting as many views. Facebook engineers were finally able to fix the bug on March 11.

Facebook’s internal investigation showed that the algorithm failed to prevent posts from being displayed even when they contained nudity, violence, and the like. Internally, the bug was classified as a level-one SEV, where SEV stands for site event. However, this is not the most severe classification. There is also a level-zero SEV, reserved for the most dramatic emergencies.

Facebook has officially acknowledged the bug. Meta spokesperson Joe Osborne said that the company “detected inconsistencies in downranking on five separate occasions, which correlated with small, temporary increases to internal metrics.”

According to the internal documents, this technical issue was first introduced in 2019. However, it had no noticeable impact until October 2021. “We traced the root cause to a software bug and applied needed fixes,” said Osborne. “The bug has not had any meaningful, long-term impact on our metrics.”

Downranking As The Best Way To Display Content You Need

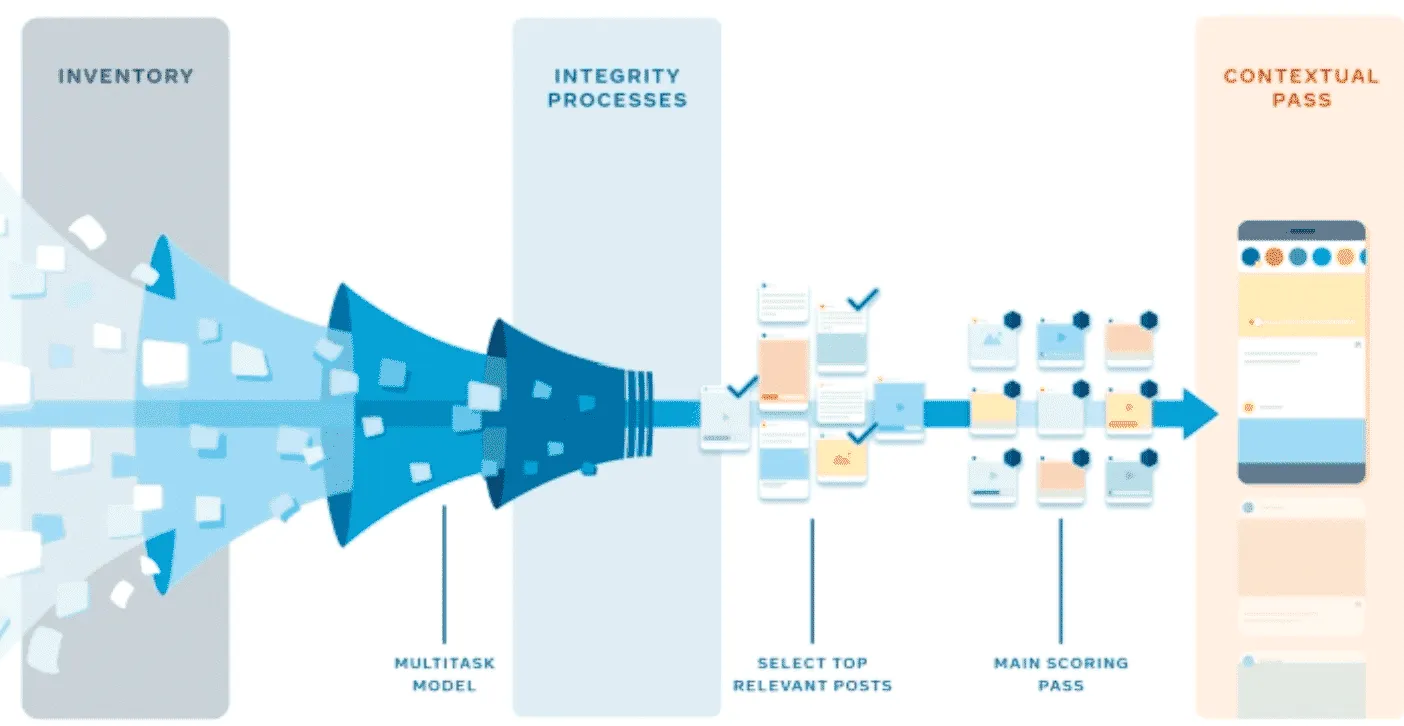

For the time being, Facebook hasn’t explained what kind of impact downranking has on the content displayed in the News Feed. We just know that downranking is the method Facebook’s algorithm uses to improve the quality of the News Feed. Moreover, Facebook has been actively expanding this approach, making it the primary way of deciding which content is displayed.

In some sense, this is a logical approach. Posts about wars and controversial political stories could otherwise rank highly, but in most cases they don’t comply with Facebook’s terms and could be banned. In other words, as Mark Zuckerberg explained back in 2018, downranking counters people’s instinct to engage with “more sensationalist and provocative” content. “Our research suggests that no matter where we draw the lines for what is allowed, as a piece of content gets close to that line, people will engage with it more on average, even when they tell us afterwards they don’t like the content.”

Downranking doesn’t apply only to so-called “borderline” content. Facebook suppresses not only content that comes close to violating its rules but also content that its systems have determined to be violating. Facebook’s AI system checks all posts first. Later, human reviewers check the “violating” content themselves.

Last September, Facebook shared a list of the kinds of content it downranks. But it didn’t provide any information on how demotion affects the distribution of that content. We hope that in the future, Facebook will be more transparent about downranked content in the News Feed.

Does Your News Feed Show Content You Like?

At the same time, Facebook doesn’t miss an opportunity to show off how its AI system works. The company says the algorithm is getting better each year and successfully fights content such as hate speech and the like. Facebook is confident that technology is the best way to moderate content at such a scale. For instance, in 2021, it officially announced that it would downrank all political content in the News Feed.

Many would agree that there was no malicious intent behind this recent ranking failure. But Facebook’s internal report shows that web-based platforms and the algorithms they use should be as transparent as possible.

“In a large complex system like this, bugs are inevitable and understandable,” said Sahar Massachi, a former member of Facebook’s Civic Integrity team. “But what happens when a powerful social platform has one of these accidental faults? How would we even know? We need real transparency to build a sustainable system of accountability, so we can help them catch these problems quickly.”
