Robots are now commonplace in factories around the world, but they can only follow specific instructions and complete simple, pre-programmed tasks. Google's robots seem to be different: they can take simple commands and perform more complex tasks. According to reports, Google's robots seem more humanlike.

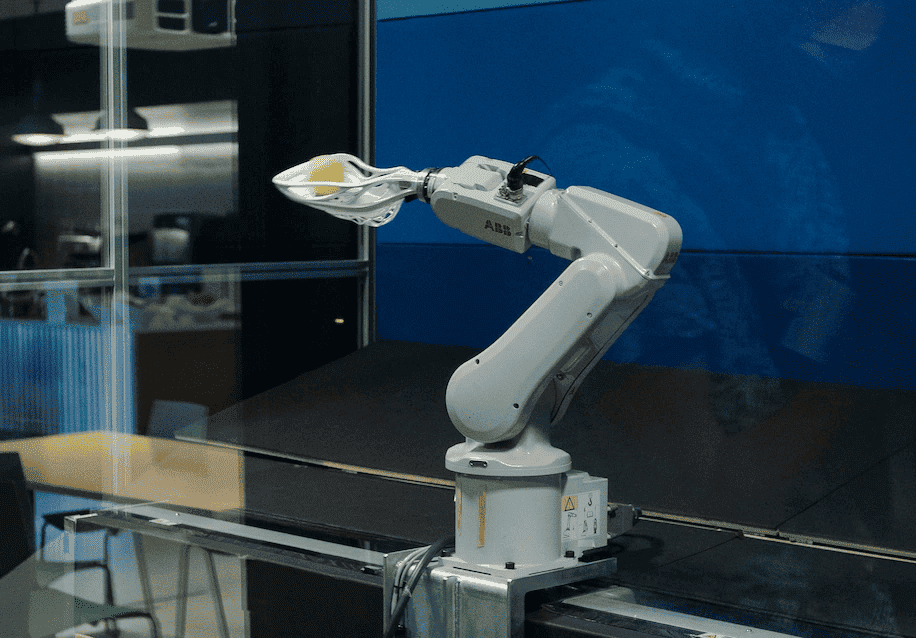

Researchers at Google Labs recently demonstrated a new robotic skill: making burgers out of a variety of plastic toy ingredients. The robot understands the cooking process and knows to add ketchup after the meat and before the lettuce. However, it thinks the correct way to do so is to put the entire bottle in the burger. The robot won’t be a smart cook anytime soon, but Google claims it represents a major breakthrough.

The researchers said that, using recently developed artificial intelligence (AI) software known as large language models, they have been able to design robots that can help humans with a wider range of everyday tasks. Instead of needing a series of separate instructions that guide them through each action one by one, such robots can now respond to complete commands, much as a human would.

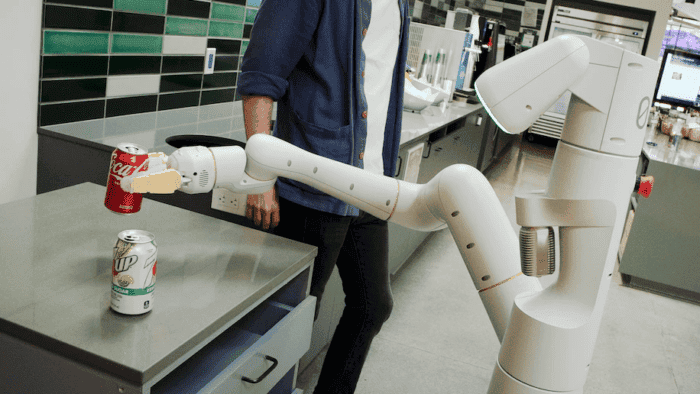

During last week’s demo, Google researchers told the robot, “I’m hungry, can you get me a snack?” The robot then searched the cafeteria, opened a drawer, found a bag of potato chips, and brought it to the humans. Google executives and researchers say this is the first time a language model has been integrated into a robot. “It’s fundamentally a very different model for training robots,” said Google research scientist Brian Ichter.

Google robots will perform complex tasks

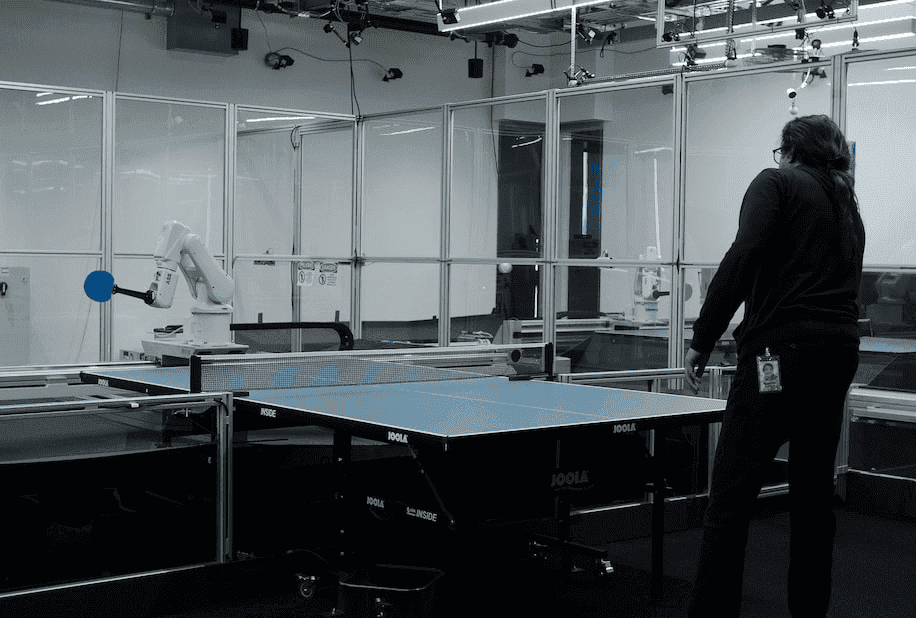

Robots on the market today typically focus on only one or two tasks, such as moving a product on an assembly line or welding two pieces of metal together. Developing robots that can perform a range of everyday tasks and learn autonomously on the job is much more complex. Tech companies large and small have been working to build such general-purpose robots for years.

Language models work by taking large amounts of text uploaded to the internet and using it to train AI software to guess what kind of response is likely to come after certain questions or comments. These models have become so good at predicting plausible responses that interacting with one often feels like talking to a well-informed person. Google and others, including OpenAI and Microsoft, have invested significant resources in building and training better language models.
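To make the idea of "guessing what comes next" concrete, here is a minimal sketch of next-word prediction using simple word-pair counts. It is a toy illustration only, not how Google's models are built: real large language models use neural networks trained on vastly more text. The corpus and the function names `train_bigram_model` and `predict_next` are made up for this example.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        following[current_word][next_word] += 1
    return following


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Tiny made-up corpus, purely for illustration.
corpus = (
    "the robot opens the drawer and the robot finds the chips "
    "and the robot brings the chips"
)
model = train_bigram_model(corpus)

print(predict_next(model, "the"))      # "robot" follows "the" most often here
print(predict_next(model, "ketchup"))  # None: the word never appears in the corpus
```

The same principle, scaled up enormously and applied to whole passages rather than single words, is what lets a language model produce a likely response to a question it has not seen before.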

However, this work is controversial. In July, Google fired an employee who had claimed that the company's language model was sentient. The consensus among AI experts is that these models are not sentient, and many fear they will exhibit bias. Some language models show racist or sexist tendencies or are easily manipulated into producing hate speech or falsehoods. In general, language models allow robots to understand more advanced planning steps, but don’t give robots all the information they need, says Deepak Pathak, an assistant professor at Carnegie Mellon University.

Still, Google is moving forward and has integrated language models with many of its robots. Now, instead of writing specific technical instructions for every task the robot can perform, researchers can simply talk to the robot in everyday language. What’s more, the new software helps the robot interpret complex, multi-step instructions on its own. The robots can now interpret commands they have never heard before, give meaningful responses and take action.
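The shift from step-by-step programming to a single everyday-language request can be sketched roughly as follows. This is an illustration under heavy assumptions, not Google's software: the skill names, the `stub_language_model` lookup table and the `execute` function are all hypothetical. In particular, the lookup table only stands in for a trained language model, so unlike the robots described above it cannot handle a request it has never seen.

```python
# Hypothetical skill names; a real robot would expose its own set.
SKILLS = {"go_to", "open", "pick_up", "bring_to_user"}


def stub_language_model(request):
    """Stand-in for a language model: maps a request to an ordered plan.

    A real system would query a trained model here; this lookup table
    exists only so the sketch runs on its own.
    """
    canned_plans = {
        "i'm hungry, can you get me a snack?": [
            ("go_to", "drawer"),
            ("open", "drawer"),
            ("pick_up", "chips"),
            ("bring_to_user", "chips"),
        ],
    }
    return canned_plans.get(request.strip().lower(), [])


def execute(request):
    """Turn one everyday-language request into a log of skill calls."""
    log = []
    for skill, target in stub_language_model(request):
        if skill not in SKILLS:
            raise ValueError(f"unknown skill: {skill}")
        log.append(f"{skill}({target})")
    return log


print(execute("I'm hungry, can you get me a snack?"))
```

The person gives one request, and the planning layer, rather than a programmer, is responsible for breaking it into the individual actions the robot already knows how to perform.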

Robots will take and create jobs

Robots that can use language models could change the way manufacturing and distribution facilities operate, said Zac Stewart Rogers, assistant professor of supply chain management at Colorado State University. “Humans and robots working together have always been hugely efficient. Robots can lift heavy objects manually, and humans can do nuanced troubleshooting,” he said.

If robots can handle complex tasks, that could mean smaller distribution centers that require fewer humans and more robots. It could also mean fewer jobs for people, although Rogers points out that when one area shrinks due to automation, other areas typically create more jobs.

General-purpose robots may still be a long way off. AI techniques such as neural networks and reinforcement learning have been used to train robots for many years. While there have been many breakthroughs, progress remains slow, and Google's robots are far from ready for real-world service. Google researchers and executives have repeatedly said they are just running a research lab and have no plans to commercialize the technology.

But it’s clear that Google and other big tech companies have a serious interest in robotics. Amazon, which uses a number of robots in its warehouses, is experimenting with drone deliveries, and earlier this month agreed to buy the maker of Roomba robots for $1.7 billion. Tesla, which has developed several self-driving features for its cars, says it is building friendly general-purpose robots that can perform everyday tasks and won’t fight back.

Google invests heavily in robotics companies

In 2013, Google began investing heavily in robotics, acquiring several companies, including Boston Dynamics, whose robots often sparked social media buzz. But the executive in charge of the project was accused of sexual misconduct and left the company shortly after. In 2017, Google sold Boston Dynamics to Japanese tech giant SoftBank. Since then, the hype around increasingly intelligent robots designed by the most powerful tech companies has cooled.

On the language model project, researchers at Google collaborated with the Everyday Robots team. Everyday Robots is a Google-owned but independently operated company that specializes in building robots that can perform a range of “repetitive and tedious” tasks. Its robots are already at work across Google’s cafeterias, cleaning counters and throwing out trash.