Google's AI has its strengths, but it also has drawbacks, as the steady stream of complaints from users flagged for doing “nothing” shows. A full-time dad in San Francisco was banned from Google after he used his Android phone to take pictures of his son’s groin infection to show doctors the child’s condition. Google AI flagged the photos as Child Sexual Abuse Material (CSAM). He immediately appealed the decision, but Google not only rejected the appeal but also flagged other videos and photos of his. As a result, the San Francisco Police Department opened an investigation.

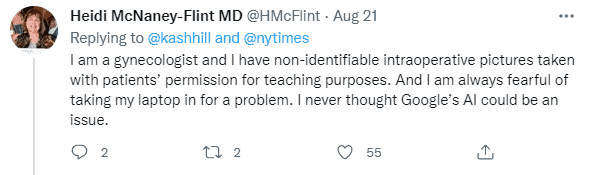

The issue has been discussed at length on Twitter. While some call this an invasion of privacy, others say they would rather the system be too strict than too lenient. A gynaecologist expressed worry about images that he needs for his work.

A “case” caused by an online medical consultation

According to reports, the parents were worried about their son’s swollen groin and the severe pain he was in. Ahead of a video consultation scheduled for the next day, the wife took several high-definition close-ups of the infection, and the images were uploaded to the medical insurance system at the nurse’s suggestion. To help the doctor make a preliminary diagnosis from the photos, the father pointed to the affected area with his finger while his wife took the pictures. With the help of the photos, the doctor made a diagnosis and prescribed antibiotics.

The son recovered, but the father’s troubles were just beginning. Within two days, the father’s Google account was disabled for “harmful content” that “severely violates Google’s policies and may be illegal”. “Harmful content” includes “child sexual abuse and exploitation”. Presumably, Google AI classified the images as child sexual abuse material, hence the ban.

Worse was to come. The father (Mark) is a heavy user of Google, relying on his Google account for everything from syncing his daily schedule to backing up photos and videos. Because of this absurd mix-up, the consequences fell like dominoes: Google locked him out of all of its services. He lost access to his email, contacts and photos, and his Google Fi account was closed (meaning he had to go to another carrier to get a new phone number). Taking Google to court would cost about $7,000. “emm…I don’t think it’s worth $7,000,” Mark said.

Google AI and manual review still banned the account

Mark used to work at a major tech company as a software engineer on an automated tool for removing video content that users had flagged as problematic. He knows that such systems usually include a human review step to catch the AI’s mistakes, so at first he was not too worried. He felt that once his case reached manual review, he would get his account back. Otherwise, he would be “eternally separated” from his previous digital life. He filled out an appeal, explained his son’s infection, and asked Google to review the decision.

A few days later, Google’s review team responded that the account would not be reinstated. In fact, it had quietly gone on to flag other videos and photos of his. To make matters worse, the San Francisco Police Department began investigating him. Ironically, because his Google Fi and Gmail accounts were disabled, the police could not reach Mark.

After an extensive investigation, the police concluded that the photos did not constitute child abuse or exploitation. But the police could not communicate directly with Google to vouch for Mark’s innocence. Mark later submitted the police report and appealed to Google again, to no avail. Coincidentally, the same drama unfolded in Texas the day after Mark’s account was disabled.

Another similar case

At the request of a paediatrician, another child’s father used an Android phone to take pictures of the infection on his baby’s “visceral parts”. The pictures were automatically backed up to Google Cloud, and he then used Google Chat to send them to the child’s mother. The outcome was strikingly similar: his Gmail account was also disabled. He almost couldn’t buy a house because he no longer had an email address.

Houston police called him in to the station for questioning, and he was quickly released after showing his chat log with the paediatrician. The police found him innocent, but Google did not. Even though he has been a Google user for 10 years and has a paid account, the account still cannot be recovered. Now the kid’s dad has to send emails from a Hotmail address and is mercilessly mocked by his friends.

Tech giants flag child sexual abuse millions of times a year

In 2021, Google filed more than 620,000 reports of alleged child abuse and disabled the accounts of more than 270,000 users. It also alerted authorities to 4,260 potential child sexual abuse victims. These figures include the two wrongly accused dads above. Tech giants that collect vast amounts of data act as gatekeepers, checking content to monitor and prevent crime. Each year, they flag images of children being exploited or sexually abused millions of times. But inspecting content can mean looking into private archives, such as your digital photo albums. The two dads simply did not expect this, and so “seeking medical attention” became “child sexual abuse” in Google’s eyes.

Tools for checking content

When checking content, technology giants generally use two tools. One is PhotoDNA, released by Microsoft in 2009.

It relies on a database of known child abuse photos and compares the photos in each user’s electronic album against that database. Even if a photo has been slightly altered, it can still be matched, which identifies users who spread child abuse images. Facebook, Twitter and Reddit, among others, all use PhotoDNA.
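To make the idea of matching "slightly different" photos concrete, here is a minimal sketch of fingerprint matching. It is not PhotoDNA, whose algorithm is proprietary; it uses a simple average hash as a stand-in, and every name in it (`average_hash`, `matches_database`, the `max_distance` threshold, the tiny 4x4 test images) is an assumption made for illustration.

```python
from typing import List, Set

Image = List[List[int]]  # grayscale pixel values, 0-255


def average_hash(pixels: Image) -> int:
    """Fingerprint an image: one bit per pixel, 1 if brighter than the mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count the bit positions where two fingerprints differ."""
    return bin(a ^ b).count("1")


def matches_database(pixels: Image, known_hashes: Set[int],
                     max_distance: int = 2) -> bool:
    """True if the image is within max_distance bits of any known fingerprint."""
    h = average_hash(pixels)
    return any(hamming_distance(h, k) <= max_distance for k in known_hashes)


# Hypothetical 4x4 "images" used only to exercise the functions.
known_image = [
    [10, 200, 10, 200],
    [200, 10, 200, 10],
    [10, 200, 10, 200],
    [200, 10, 200, 10],
]
# A slightly altered copy: uniformly brightened, with one pixel changed.
edited_copy = [[p + 5 for p in row] for row in known_image]
edited_copy[0][0] = 120
# An image with a different layout of light and dark areas.
unrelated = [
    [200, 200, 10, 10],
    [200, 200, 10, 10],
    [10, 10, 200, 200],
    [10, 10, 200, 200],
]

database = {average_hash(known_image)}
print(matches_database(edited_copy, database))
print(matches_database(unrelated, database))
```

Note what this kind of matching can and cannot do: it only recognises copies of images already in the database. Newly taken photos, like those in the two cases above, could only be flagged by a classifier that judges image content.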

The other is the Content Safety API, a developer toolkit released by Google in 2018. It uses deep neural networks to process images, allowing reviewers to process 700% more child abuse images in the same amount of time. Google uses this AI toolkit itself and also makes it available to Facebook and others.

The general review process for this AI toolkit is as follows:

- The photos (here, those of the two dads) are automatically backed up to Google Cloud and flagged for manual review

- A human reviewer confirms whether the photos meet the definition of child abuse imagery

- The user account is locked and the search continues for other child abuse images in the account

- A report is sent to the CyberTipline of the National Center for Missing and Exploited Children (NCMEC)
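The four steps above can be sketched as a small pipeline. This is only an illustration of the flow as the article describes it, not Google's implementation: `Account`, `Outcome`, `review_account` and the two callables standing in for the AI classifier and the human reviewer are all hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Account:
    owner: str
    photos: List[str]          # photo identifiers backed up to the cloud
    locked: bool = False


@dataclass
class Outcome:
    flagged: List[str] = field(default_factory=list)    # flagged by the classifier
    confirmed: List[str] = field(default_factory=list)  # confirmed by a human
    reported: bool = False                               # sent to the CyberTipline


def review_account(account: Account,
                   classifier_flags: Callable[[str], bool],
                   human_confirms: Callable[[str], bool]) -> Outcome:
    outcome = Outcome()
    # Step 1: backed-up photos are scanned and suspicious ones are flagged.
    outcome.flagged = [p for p in account.photos if classifier_flags(p)]
    # Step 2: a human reviewer checks each flagged photo.
    outcome.confirmed = [p for p in outcome.flagged if human_confirms(p)]
    if outcome.confirmed:
        # Step 3: lock the account and keep searching its remaining photos.
        account.locked = True
        remaining = [p for p in account.photos if p not in outcome.flagged]
        outcome.confirmed += [p for p in remaining if human_confirms(p)]
        # Step 4: file a report.
        outcome.reported = True
    return outcome


# Hypothetical example: the reviewer sees only the image, not why it was taken.
account = Account(owner="mark", photos=["holiday.jpg", "medical_closeup.jpg"])
result = review_account(
    account,
    classifier_flags=lambda photo: "medical_closeup" in photo,
    human_confirms=lambda photo: "medical_closeup" in photo,
)
print(account.locked, result.reported)
```

In this sketch nothing carries the context of the photo, such as a doctor's request, into either decision. That matches what the two cases describe: the explanation only entered the picture later, through an appeal or a police investigation.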

Google’s AI toolkit has demonstrated an impressive ability to identify image content. In 2021, the CyberTipline received about 80,000 reports per day. These are reviewed by 40 analysts so that NCMEC can pass cases on to the police.
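A quick back-of-the-envelope calculation shows what those two figures imply for each analyst. The 8-hour working day is an assumption added here for illustration, as is the idea that the work is split evenly; the article gives only the report and analyst counts.

```python
def workload(reports_per_day: int, analysts: int, shift_hours: int = 8):
    """Return (reports per analyst per day, seconds available per report)."""
    per_analyst = reports_per_day // analysts
    seconds_per_report = shift_hours * 3600 / per_analyst
    return per_analyst, seconds_per_report


# Figures from the article; shift_hours=8 is an assumed value.
per_analyst, seconds_per_report = workload(80_000, 40)
print(per_analyst)          # 2000
print(seconds_per_report)   # 14.4
```

Under these assumptions, each analyst would face about 2,000 reports a day, or roughly 14 seconds per report.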

The CyberTipline director said it was “the system working properly”. But this is undoubtedly a double-edged sword: in serious cases, even innocent parents may lose custody of their children.