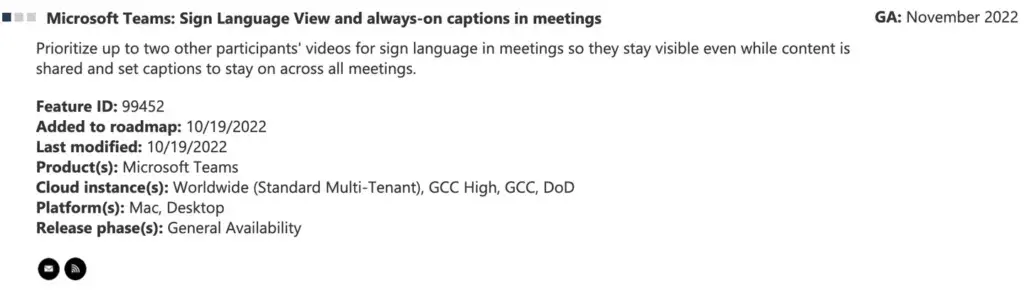

In the past few months, Microsoft has added several useful features to Teams, and the company says more are on the way. Microsoft is working on accessibility features for hearing-impaired customers that will help them participate in Teams meetings. It plans to add sign language views and always-on captions to Teams next month. The Microsoft 365 roadmap page reveals some details: the company will “prioritize sign language video for up to two other participants in meetings”. These videos will remain visible even when other participants share video.

Microsoft didn’t explain how the feature would work in Teams. Its major rival, Zoom, already supports sign language interpretation in meetings and webinars. In Zoom, hosts can designate multiple participants as interpreters for sign language channels, which lets attendees choose among several interpreters. The Teams feature is expected to be available from November this year, although there are reports that it could face some delays.

Microsoft Teams uses AI to improve network call quality

Microsoft Teams is one of the most used online collaboration tools in the workplace, and the company regularly makes improvements to the software. Now, Microsoft has detailed a new artificial intelligence (AI)-driven initiative that could make Teams calls sound better, especially in poor network conditions. The audio of a Microsoft Teams call is sent in what are essentially “packets”. These packets may be lost over a poor network connection, resulting in distorted audio.

While Microsoft can’t really fix users’ internet connectivity issues, the company leverages “packet loss concealment” (PLC) technology in Teams to make users’ voices sound better in challenging network environments. At a very high level, PLC uses artificial intelligence models to identify lost packets in an audio transmission and then predicts the missing audio to fill in the gaps.

Microsoft said it used deep learning techniques to accomplish this improvement. Traditional models can fill audio gaps of 20-40 ms, whereas Microsoft's PLC model can fill gaps of up to 80 ms. Microsoft trained the PLC model on 600 hours of open-source data. It also collected “millions of anonymous network samples,” or “traces,” from real Teams calls. After the PLC model was released, there was a 15% performance improvement, mainly benefiting users in poor network environments.
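
To make the idea of concealment and gap limits concrete, here is a minimal sketch of a simple, non-AI form of packet loss concealment: repeat the last good frame while fading it out, and fall back to silence once the gap exceeds a limit. This is not Microsoft's deep learning model. The names `conceal`, `FRAME_MS`, `max_gap_ms`, and `decay` are hypothetical, and the 20 ms frame duration is an assumption made for illustration.

```python
from typing import List, Optional

FRAME_MS = 20  # assumed duration of audio carried by one packet (illustrative)


def conceal(frames: List[Optional[List[float]]],
            max_gap_ms: int = 40,
            decay: float = 0.5) -> List[List[float]]:
    """Fill lost frames (None) by repeating the last good frame, faded out.

    Lost frames beyond max_gap_ms of continuous loss are replaced by silence.
    """
    out: List[List[float]] = []
    last_good: Optional[List[float]] = None
    gap_ms = 0  # length of the current run of lost frames
    # Use the first received frame to learn how many samples a frame holds.
    frame_len = next((len(f) for f in frames if f is not None), 0)

    for frame in frames:
        if frame is not None:
            # Packet arrived: pass it through and reset the gap counter.
            last_good = frame
            gap_ms = 0
            out.append(list(frame))
            continue

        gap_ms += FRAME_MS
        if last_good is None or gap_ms > max_gap_ms:
            # Nothing to repeat, or the gap is too long to conceal.
            out.append([0.0] * frame_len)
        else:
            # Repeat the last good frame, quieter with each lost frame.
            gain = decay ** (gap_ms // FRAME_MS)
            out.append([s * gain for s in last_good])
    return out


# Three consecutive lost packets (60 ms) between two received ones.
call = [[1.0, 1.0], None, None, None, [2.0, 2.0]]
short_limit = conceal(call, max_gap_ms=40)
long_limit = conceal(call, max_gap_ms=80)
```

With the 40 ms limit, the third lost frame becomes silence; with the 80 ms limit, all three lost frames are filled. A learned model such as the one described above would predict plausible audio for the gap rather than replaying a faded copy.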