Google Makes a Breakthrough With an Improved Quantum Processor

Google · Thursday, 23 February 2023 at 02:13

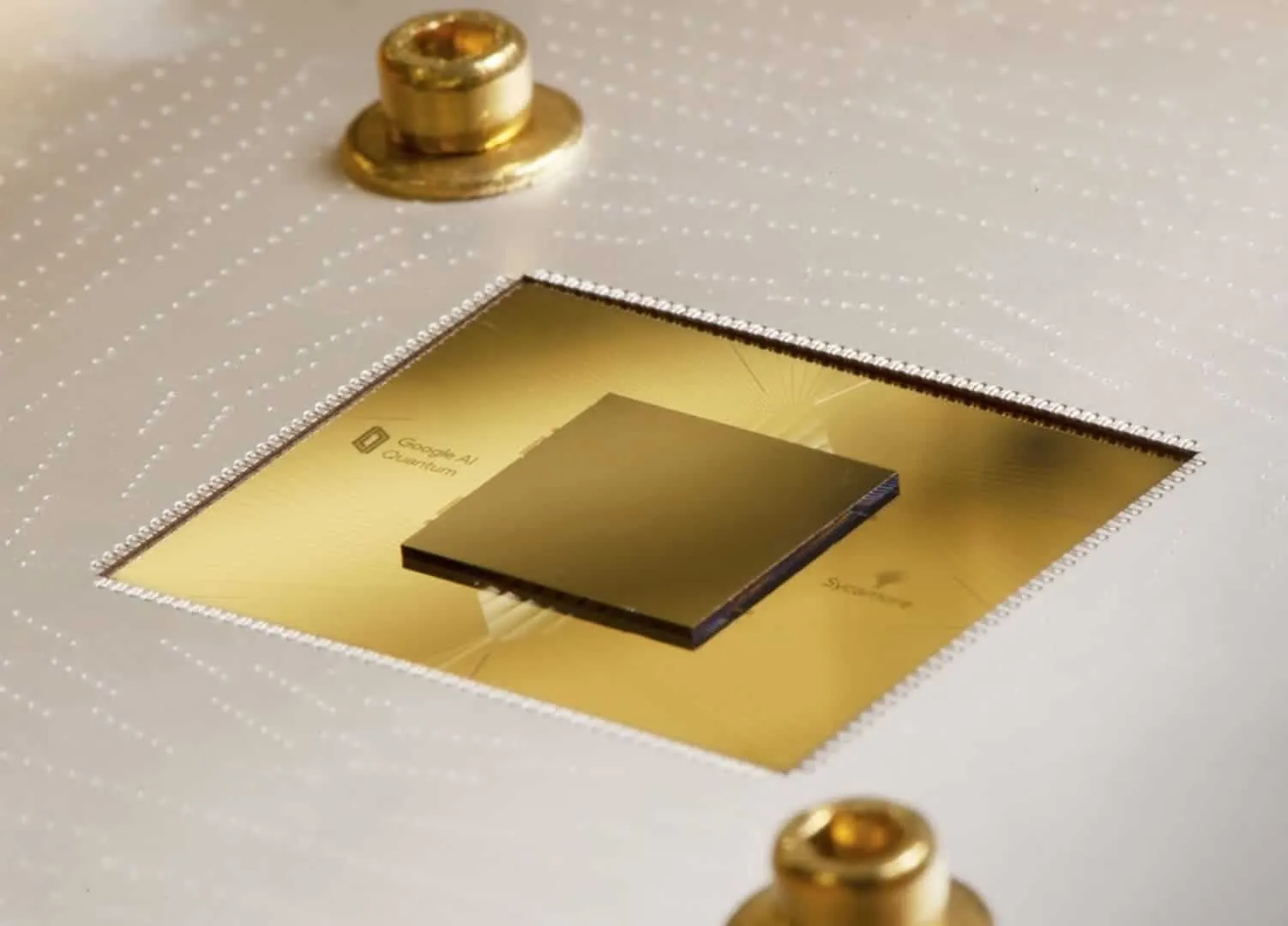

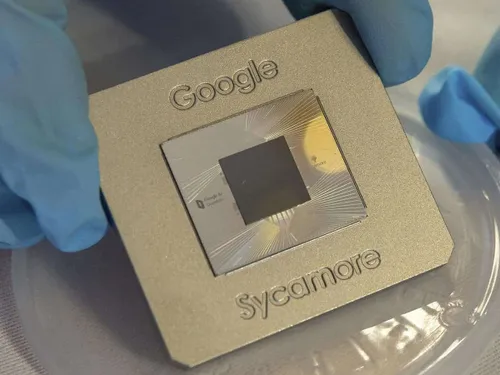

Google has just announced the next generation of its Sycamore quantum processor, which is capable of correcting quantum errors. The demonstration the tech giant has announced marks a real breakthrough in quantum computing.

That said, the new iteration of Sycamore is not a dramatic change. It has the same number of qubits as the earlier version, but it performs much better. Error correction by itself is not even the main highlight of the announcement: Google says it already got error correction working years ago.

Google Shows Signs of Progress With Sycamore

The signs of progress with Sycamore are subtle rather than dramatic. In the earlier generation of Google’s processors, the qubits were so error-prone that integrating more of them into an error-correction scheme caused more problems than it solved.

In other words, on the previous quantum processors, adding qubits to the error-correction scheme cost more than it gained. With the new iteration, Google’s quantum team got the error rate low enough that adding more qubits now results in a net gain.

The Problems that Google Addressed

Assuming all the qubits behave as they should, more qubits let you perform calculations more accurately and more efficiently. In other words, the more qubits a machine has, the more capable it will be. In general, though, qubits do not behave as intended, and fixing that is Google’s main priority.

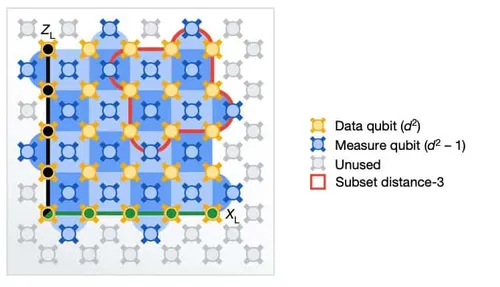

The solution to this issue is to create an error-corrected logical qubit. This involves distributing a quantum state across a group of hardware qubits. Error correction is then enabled by additional qubits that neighbor each member of the logical qubit.

Google acknowledges two main problems. The first is that there are not enough hardware qubits to spare. The second is that the error rates of the qubits are too high, which makes them unsuitable for reliable work.

In short, adding today’s error-prone qubits to a logical qubit does not make the system more robust. Instead, it makes the system more likely to suffer many errors at once, which makes correcting them very challenging.
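The trade-off described above can be sketched with a toy scaling model. This is only an illustration, not Google's analysis: it assumes a commonly quoted heuristic in which the logical error rate scales as `A * (p / p_th) ** ((d + 1) / 2)`, where `p` is the hardware error rate, `p_th` is a threshold, and `d` is the size (code distance) of the logical qubit. The function name, the prefactor, and every numeric value below are made-up assumptions chosen to show the trend.

```python
def logical_error_rate(p, p_th, distance, prefactor=0.1):
    """Toy heuristic (assumed, not from the article):
    logical error ~ prefactor * (p / p_th) ** ((distance + 1) / 2)."""
    return prefactor * (p / p_th) ** ((distance + 1) / 2)


P_TH = 0.01  # hypothetical threshold, for illustration only

# Hypothetical hardware error rates on either side of the threshold.
scenarios = {"below threshold": 0.005, "above threshold": 0.015}

results = {}
for label, p in scenarios.items():
    # Larger distance means more hardware qubits in the logical qubit.
    results[label] = [logical_error_rate(p, P_TH, d) for d in (3, 5, 7)]
    print(label, [round(r, 5) for r in results[label]])
```

In this sketch, the logical error rate shrinks as the logical qubit grows when the hardware error rate is below the threshold, and grows when it is above. That is the regime change the article describes between the two processor generations.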

The Same but Different

Google’s response to these issues was to build a new generation of its Sycamore processor. It has the same number and layout of hardware qubits as before. In other words, the headline specs of the new quantum processor did not really see an upgrade.

Instead, Google focused on lowering the error rate of all the qubits. The aim was to make each qubit capable of handling more complicated operations without failing. Here’s a quick look at how Google tested the error-correction capabilities of the logical qubits.

The published paper describes two different methods. In both cases, the data was held in a square grid of qubits, each of which had neighboring qubits that Google measured to implement error correction. The only difference between the methods was the size of the grid.
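To get a feel for how the grid size drives the hardware cost, here is a minimal sketch. It assumes the grids follow the standard rotated surface code layout, where a grid of side `d` uses `d * d` data qubits plus `d * d - 1` neighboring measurement qubits, and that the two grids have sides 3 and 5. The article itself does not state the layout or the sizes, so treat both as assumptions; the function name is hypothetical.

```python
def surface_code_qubits(distance):
    """Qubit counts for an assumed rotated surface code of the given side length."""
    data = distance ** 2         # qubits holding the distributed quantum state
    measure = distance ** 2 - 1  # neighboring qubits measured to detect errors
    return data, measure, data + measure


for d in (3, 5):  # assumed grid sizes, not given in the article
    data, measure, total = surface_code_qubits(d)
    print(f"side {d}: {data} data + {measure} measurement = {total} qubits")
```

Under these assumptions, going from the smaller grid to the larger one roughly triples the number of hardware qubits devoted to a single logical qubit.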

Google’s research team performed several different measurements to gauge performance, but the key goal was simple: to find out which scheme had the lower error rate. The larger scheme won, though the difference was not that significant.

The larger logical qubit had an error rate of 2.914%, while the smaller one had 3.028%. Although this difference might seem small, it shows that adding qubits now lowers the error rate instead of raising it, which is a proper advancement in the quantum world.
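The size of that gap is easy to work out from the two figures quoted above. The short calculation below uses only those two numbers; the variable names are illustrative.

```python
smaller_qubit_error = 3.028  # percent, smaller logical qubit
larger_qubit_error = 2.914   # percent, larger logical qubit

# Absolute gap in percentage points.
absolute_gain = smaller_qubit_error - larger_qubit_error

# Relative reduction in error when moving to the larger logical qubit.
relative_gain = absolute_gain / smaller_qubit_error

# Ratio of the two error rates (values above 1 mean the larger qubit is better).
suppression_ratio = smaller_qubit_error / larger_qubit_error

print(f"absolute gap: {absolute_gain:.3f} percentage points")
print(f"relative reduction: {relative_gain:.2%}")
print(f"error ratio: {suppression_ratio:.3f}")
```

The larger logical qubit is about 0.114 percentage points better, a relative reduction of a little under 4%. The margin is slim, but the ratio is above 1, which is the direction that matters.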
