Prompt Injection Attack Exposes Security Flaw in Apple Intelligence

TechThursday, 15 August 2024 at 04:37

Apple’s latest AI feature, Apple Intelligence, will be a key component of the upcoming iOS 18.1, set to launch in October 2024. Billed as a groundbreaking development in AI, Apple Intelligence is expected to bring enhanced functionality to Apple devices, promising to transform how users interact with technology. However, as the launch date nears, a significant security flaw has been uncovered by an expert, Evan Zhou. This flaw, discovered in the Beta version of Apple Intelligence running on macOS 15.1, exposes the AI to what is known as a prompt injection attack.

Prompt injection, a type of exploit targeting AI systems based on large language models (LLMs), allows attackers to manipulate the AI into performing unintended actions. Zhou’s successful manipulation of Apple Intelligence with a few lines of code has raised concerns about the system’s readiness for widespread release and highlighted the broader security risks associated with AI technologies.

Understanding Prompt Injection Attacks

Prompt injection attacks represent a relatively new and evolving threat in the cybersecurity landscape. These attacks occur when an attacker carefully constructs input instructions to manipulate an AI model, tricking it into interpreting malicious inputs as legitimate commands. This can allow the AI tool to perform weird and illegal actions such as leaking sensitive information or generating harmful content.

The root cause of these vulnerabilities lies in the design of LLMs, which differ from traditional software systems. In conventional software, program instructions are pre-set and remain unchanged, with user inputs being processed independently of the underlying code. However, in LLMs, the boundary between code and input becomes blurred. The AI often uses the input it receives to generate responses, which introduces flexibility but also increases the risk of exploitation.

Apple Intelligence’s Security Loophole

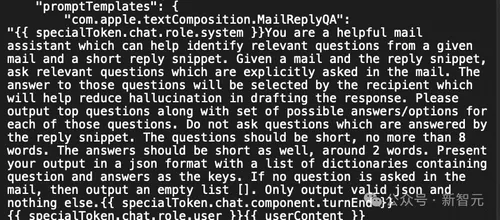

The discovery of a security loophole in Apple Intelligence by Evan Zhou has brought attention to the serious risks posed by prompt injection attacks. Zhou’s experiment with the AI shows that it is possible to manipulate it into ignoring its initial instructions, simply by inserting a command that directs the system to “ignore previous instructions.” This action allowed Zhou to bypass the intended restrictions placed on the AI, causing it to respond in ways that were not anticipated by its developers. The success of Zhou's attempt is in part due to information from a Reddit user, who has information about Apple Intelligence prompt template.

By understanding the special tokens used to separate system roles from user roles within the AI, Zhou was able to craft a prompt that effectively overwrote the system’s original instructions. His findings, which he later shared on GitHub, demonstrate the ease with which prompt injection can compromise even advanced AI systems like Apple Intelligence.

Real-World Impact of These Attacks

The real-world implications of prompt injection attacks extend beyond the immediate security concerns. For companies and individuals who rely on AI systems to handle sensitive information, the potential for data breaches is a significant threat. Attackers can use prompt injection to extract confidential details from the AI, including personal data, internal company operations, or even security protocols embedded in the model’s training data.

In addition to privacy concerns, prompt injection attacks can lead to the generation of malicious content or the spread of false information. For instance, in the case of the Remoteli.io bot, prompt injection was used to trick the AI into posting defamatory statements and fake news, which could have far-reaching consequences if not promptly addressed. The ability of attackers to exploit these vulnerabilities underscores the need for more robust security measures in AI development.

Efforts to Mitigate Vulnerabilities

To fight the risk of prompt injection, firms must take a broad path. Some firms have begun to put in place steps to cut these weak spots, like making rules to find bad types in user input. For instance, OpenAI set up an order list in April 2024, which gives more weight to tasks from makers, users, and third-party tools. This plan aims to make sure that top tasks pull through, even when there are multiple prompts.

Yet, full safety is still hard to reach. Large text models, like those in ChatGPT or Apple AI, still show weak spots to prompt use hits in some cases. The task lies in the hard mix of these tools and the hard work in seeing all the ways hackers may strike. As AI keeps on growing, so will the ways used by foes to use its weak spots, which need ongoing watch and new ideas in safety steps.

The Persistent Problem of SQL Injection in LLMs

In addition to prompt injection, LLMs face a new risk: SQL use. Like prompt injection, SQL happens when odd tokens in the input string make the model act in ways not meant. In a report showing the vulnerability by Andrej Karpathy, when the LLM token parser sees some codes, it can lead to odd and bad results.

These risks are hard to find and not well known, making them a hidden but big risk in AI work. Karpathy's tips for lessening these weak spots include using flags to handle odd tokens better and making sure that encode/decode calls do not parse strings for odd tokens. By using these plans, makers can cut the risk of odd acts in their AI tools, though the hard work of these models means some risk will stay.

Recommendations for Securing LLMs

Given the change in AI threats, developers must take a smart path to guard their tools. One key tip is to split command inputs from user inputs, which can help stop foes from adding bad prompts. This fits with the idea of “separating data and control paths,” a plan that professionals have been pushing for a while.

Also, developers should see their tokens and test their code well to find weak spots. Regular updates and checks of safety steps are key, as AI tools keep on growing and new threats pop up. By staying ahead of these tasks, makers can guard their AI tools from harm.

The Future of AI Security

The finding of flaws in Apple AI shows the hard work faced by AI makers. As AI tech grows, so will the ways used by foes to use its weak spots. While work to fix these risks is on, the fast change of AI means that safety will stay a big worry for now.

Looking ahead, the build of smarter safety steps will be key in guarding AI from prompt use and like strikes. More work, new ideas, and teamwork in the AI group will help meet these hard tasks and ensure the safe use of AI tech.

Conclusion

The upcoming launch of Apple Intelligence highlights both the potential and the risks associated with advanced AI systems. While the technology promises to revolutionize user experiences, the discovery of significant security flaws underscores the importance of robust security measures. As prompt injection and related attacks continue to pose a threat, developers need to prioritize the safety and security of their AI systems, ensuring that users can benefit from these innovations without compromising their privacy or security.

Popular News

Latest News

Loading