OpenAI announced several updates to its API services today at its Developer Day event in San Francisco. The updates aim to give developers more control over AI models, improve speech-based applications, cut the cost of repeatedly sending the same prompt text, and boost the performance of smaller models.

The event featured four key API updates: Model Distillation, Prompt Caching, Vision Fine-Tuning, and a new Realtime API. For those unfamiliar, an API (Application Programming Interface) lets software developers integrate features from one application into another.

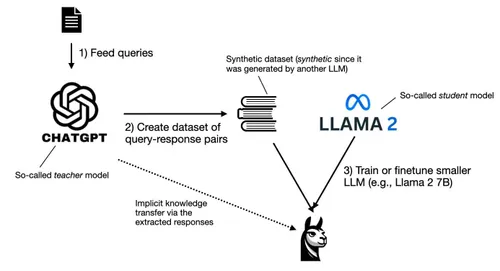

Model Distillation

One of the big announcements was a new feature called Model Distillation. Distillation improves smaller models, like GPT-4o mini, by training them on the outputs of larger models. Previously, distillation was a complex, multi-step process: developers had to juggle separate tools to generate data, fine-tune models, and measure how well the smaller models performed.

Now, OpenAI has simplified this process by building a Model Distillation suite into its API platform. Developers can use it to generate datasets from the outputs of advanced models like GPT-4o and o1-preview, fine-tune smaller models to respond in a similar way, and then run evaluations to measure the smaller model's performance.
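To make the dataset and evaluation steps more concrete, here is a minimal sketch of turning a larger "teacher" model's answers into training data for a smaller "student" model. It assumes the chat-style JSONL layout that OpenAI's fine-tuning endpoint accepts (one `{"messages": [...]}` object per line). The prompts, answers, system prompt, and the helper names `build_distillation_records` and `agreement_rate` are all hypothetical; in a real workflow the teacher answers would come from calls to a model such as GPT-4o rather than a hard-coded list.

```python
import json

# Hypothetical (prompt, teacher_answer) pairs. In a real workflow the answers
# would be generated by a large "teacher" model such as GPT-4o.
TEACHER_OUTPUTS = [
    ("Classify the sentiment: 'The update is fantastic.'", "positive"),
    ("Classify the sentiment: 'The app keeps crashing.'", "negative"),
]

SYSTEM_PROMPT = "You are a sentiment classifier. Answer with one word."


def build_distillation_records(pairs, system_prompt):
    """Turn teacher outputs into chat-format fine-tuning records (assumed layout)."""
    records = []
    for prompt, answer in pairs:
        records.append({
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": prompt},
                # The teacher's answer is the target the student learns to imitate.
                {"role": "assistant", "content": answer},
            ]
        })
    return records


def to_jsonl(records):
    """Serialize one JSON object per line, the layout fine-tuning files use."""
    return "\n".join(json.dumps(record) for record in records)


def agreement_rate(student_answers, teacher_answers):
    """Crude evaluation: fraction of prompts where the student matches the teacher."""
    if not teacher_answers:
        return 0.0
    matches = sum(s.strip().lower() == t.strip().lower()
                  for s, t in zip(student_answers, teacher_answers))
    return matches / len(teacher_answers)


if __name__ == "__main__":
    records = build_distillation_records(TEACHER_OUTPUTS, SYSTEM_PROMPT)
    print(to_jsonl(records))
    # Hypothetical student outputs after fine-tuning, compared with the teacher.
    print(agreement_rate(["positive", "neutral"], ["positive", "negative"]))  # 0.5
```

Exact-match agreement is only a stand-in here; OpenAI's suite runs its own evaluations, and a real test set would use more prompts and a task-appropriate metric.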

To help developers get started with this new feature, OpenAI is offering two million free training tokens per day on GPT-4o mini and one million free training tokens per day on GPT-4o until October 31. Tokens are the chunks of text, often whole words or word fragments, that AI models read and generate when responding to prompts. After this period, the cost of training a distilled model will match OpenAI's regular fine-tuning prices.

Prompt Caching

To cut API costs, OpenAI also introduced Prompt Caching, which lets developers save money when they send prompts that begin with the same text over and over.

Many applications that use OpenAI models include long “prefixes” in front of prompts. These prefixes help guide the AI to respond in a certain way, such as using a friendly tone or formatting responses in bullet points. While these prefixes improve the AI’s performance, they also increase the cost per API call since the model processes more text.

With Prompt Caching, the API automatically stores these long prefixes for up to an hour. If a later prompt starts with the same prefix, the API charges 50% less for the cached portion of the input. This could lead to significant savings for developers who call the API frequently. OpenAI's competitor, Anthropic, added a similar feature to its own models in August.
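The discount arithmetic is easy to sketch. The function below estimates the input cost of a single request when part of the prompt is served from the cache, assuming that cached prefix tokens are billed at 50% of the normal input rate and the remaining tokens at the full rate. The $2.50-per-million price and the token counts are placeholders chosen for illustration, not quoted OpenAI figures.

```python
def input_cost(prompt_tokens, cached_tokens, price_per_million, cache_discount=0.5):
    """Estimate the input cost in dollars of one request.

    Assumes tokens served from the prompt cache are billed at
    (1 - cache_discount) of the normal rate; the rest are billed in full.
    """
    if not 0 <= cached_tokens <= prompt_tokens:
        raise ValueError("cached_tokens must be between 0 and prompt_tokens")
    uncached = prompt_tokens - cached_tokens
    cached_rate = price_per_million * (1 - cache_discount)
    return (uncached * price_per_million + cached_tokens * cached_rate) / 1_000_000


if __name__ == "__main__":
    PRICE = 2.50  # hypothetical dollars per million input tokens
    # A 3,000-token prompt whose first 2,000 tokens are a reused prefix.
    first_call = input_cost(3_000, 0, PRICE)       # nothing cached yet
    repeat_call = input_cost(3_000, 2_000, PRICE)  # prefix hits the cache
    print(f"first: ${first_call:.6f}, repeat: ${repeat_call:.6f}")
    # prints: first: $0.007500, repeat: $0.005000
```

Because the discount applies only to a shared prefix, prompt order matters: putting fixed instructions first and user-specific text last gives the cache the longest possible match.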

Vision Fine-Tuning

Another major update lets developers fine-tune GPT-4o with images, not just text, which should make the model better at understanding and analyzing images. This could be particularly useful for tasks like visual search, object detection for autonomous vehicles, and medical image analysis.

To fine-tune the model with images, developers can upload labeled image datasets to the OpenAI platform. OpenAI says that Coframe, a startup focused on AI-powered web development tools, has already used this feature to improve its model's ability to generate website designs. By training GPT-4o on hundreds of images paired with the corresponding code, Coframe saw a 26% improvement in the model's ability to create consistent visual layouts.
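A labeled image example can be pictured as a short chat transcript in which the user turn contains an image. The sketch below assumes the JSONL layout OpenAI describes for vision fine-tuning, where a message's `content` is a list mixing `text` and `image_url` parts. The helper name `make_vision_example`, the system prompt, the URL, the question, and the label are all hypothetical placeholders.

```python
import json


def make_vision_example(image_url, question, label):
    """Build one hypothetical vision fine-tuning record in chat format.

    The user turn mixes a text part and an image part; the assistant turn
    holds the label the model should learn to produce.
    """
    return {
        "messages": [
            {"role": "system", "content": "You identify UI components in screenshots."},
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            },
            {"role": "assistant", "content": label},
        ]
    }


if __name__ == "__main__":
    example = make_vision_example(
        "https://example.com/screenshots/login-page.png",  # placeholder URL
        "Which component is highlighted?",
        "A primary 'Sign in' button",
    )
    # Each training example occupies one line of the uploaded .jsonl file.
    print(json.dumps(example))
```

A dataset like Coframe's would simply repeat this pattern hundreds of times, one JSON object per line, with the desired output (for them, code) as the assistant's reply.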

During October, OpenAI will provide one million free training tokens per day to developers for fine-tuning GPT-4o with images. Starting in November, the service will cost $25 per million tokens.

Realtime

OpenAI also introduced a new API called Realtime, which allows developers to build speech-based applications that process and respond to audio in real time.

Previously, a developer who wanted to build an AI app that could talk to users had to chain several steps together: transcribe the audio into text, send that text to a language model such as GPT-4, and then convert the model's reply back into speech. This pipeline often introduced delays and lost important details such as emotion and emphasis.

With the Realtime API, the entire process is streamlined: the API processes audio directly, which OpenAI says makes the pipeline faster, cheaper, and more accurate. The Realtime API also supports function calling, meaning the model can trigger functions that developers define, letting an app take real-world actions such as placing orders or scheduling appointments.
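Function calling works by having the model reply with the name of a developer-defined function plus its arguments, which the app then executes. The sketch below shows only the app's side of that exchange, assuming the call arrives as a function name and a JSON-encoded argument string (the general shape OpenAI's function-calling interfaces use). The function `schedule_appointment`, its fields, and the `TOOLS` registry are hypothetical, and the real Realtime API delivers calls as events over its streaming connection rather than as a plain dictionary.

```python
import json


def schedule_appointment(date, time, name):
    """Hypothetical business function the model is allowed to trigger."""
    # A real app would write to a calendar or booking system here.
    return {"status": "booked", "date": date, "time": time, "name": name}


# Registry of functions the app exposes to the model, keyed by name.
TOOLS = {"schedule_appointment": schedule_appointment}


def handle_function_call(call):
    """Run the function the model asked for and return a JSON result string.

    `call` is assumed to look like {"name": ..., "arguments": "<JSON string>"}.
    """
    func = TOOLS.get(call["name"])
    if func is None:
        return json.dumps({"error": f"unknown function {call['name']!r}"})
    args = json.loads(call["arguments"])
    result = func(**args)
    # The result is sent back to the model so it can tell the user what happened.
    return json.dumps(result)


if __name__ == "__main__":
    model_call = {
        "name": "schedule_appointment",
        "arguments": '{"date": "2024-10-15", "time": "14:00", "name": "Ada"}',
    }
    print(handle_function_call(model_call))
```

Keeping an explicit registry means the model can only trigger functions the developer has deliberately exposed; an unknown name returns an error instead of executing anything.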

In the future, OpenAI plans to expand Realtime's capabilities to handle more types of input, including video.

The Realtime API costs $5 per million input tokens and $20 per million output tokens for text. For audio, it costs $100 per million input tokens and $200 per million output tokens, which works out to roughly $0.06 per minute of audio input and $0.24 per minute of audio output.
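The per-minute figures follow from the token prices. Working backward, $0.06 per minute at $100 per million tokens implies about 600 audio input tokens per minute, and $0.24 per minute at $200 per million implies about 1,200 audio output tokens per minute. The sketch below uses those derived rates (an approximation, not an official conversion) to estimate the audio cost of a conversation; the 10-minute and 4-minute durations in the example are hypothetical.

```python
# Quoted Realtime API audio prices, in dollars per million tokens.
AUDIO_INPUT_PER_MILLION = 100.0
AUDIO_OUTPUT_PER_MILLION = 200.0

# Token rates implied by the per-minute estimates
# ($0.06/min input, $0.24/min output); an approximation, not an official figure.
INPUT_TOKENS_PER_MINUTE = 600
OUTPUT_TOKENS_PER_MINUTE = 1_200


def audio_cost(input_minutes, output_minutes):
    """Estimate the audio cost in dollars of a Realtime session."""
    input_tokens = input_minutes * INPUT_TOKENS_PER_MINUTE
    output_tokens = output_minutes * OUTPUT_TOKENS_PER_MINUTE
    return (input_tokens * AUDIO_INPUT_PER_MILLION
            + output_tokens * AUDIO_OUTPUT_PER_MILLION) / 1_000_000


if __name__ == "__main__":
    # Hypothetical call: the user speaks for 10 minutes, the model for 4.
    print(f"${audio_cost(10, 4):.2f}")  # prints $1.56
```

Output audio costs four times as much per minute as input audio, so in this estimate the model's speaking time dominates the bill.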

Conclusion

OpenAI’s new API updates offer developers powerful tools to improve their applications. With Model Distillation, Prompt Caching, Vision Fine-Tuning, and the Realtime API, developers can create more customized, efficient, and responsive AI-powered apps. These updates also show OpenAI’s commitment to making advanced AI technology more accessible, cost-effective, and useful across various industries. Whether it's making speech-based apps faster or helping smaller models perform better, OpenAI is giving developers the tools they need to push the boundaries of what AI can do.
